Picking up where we left off yesterday, the AMA’s Spring Break survey has problems other than the disclosure of its methodology. We must also consider the misleading reporting of results from the survey that were based on less than the full sample.

I should make it clear that in discussing this survey, I in no way mean to minimize the public health threat arising from the reckless behavior often in evidence at the popular spring break destinations. Of course, one need not look to spring break trips to find an alarming rate of both binge drinking and unprotected sex among college-age adults (and click both links to see examples of studies that meet the very highest standards of survey research).

MP also has no doubt that spring break trips tend to increase such behavior. Academic research on the phenomenon is rare, but eleven years ago, researchers from the University of Wisconsin-Stout surveyed what they explicitly labeled a “convenience sample” of students found, literally, on the beach at Panama City, Florida, during spring break. They found, among other things, that 92% of the men and 78% of the women they interviewed reported participating in binge drinking episodes the previous day (although they were also careful to note that students at other locations, or involved in activities other than sitting on the beach, may have differed from those sampled).

In this case, however, the AMA was not looking to break new “academic” ground but to produce a “media advocacy tool.” The apparent purpose, given the AMA’s longstanding work on this subject, was to raise alarm bells about the health risks of spring break to young women. The question is whether these “media advocacy” efforts went a bit too far in pursuing an arguably worthy goal.

Also as noted yesterday, the survey got a lot of exposure in both print and broadcast news and the television accounts tended to focus on the “girls gone wild” theme. For example, on March 9, the CBS Early Show’s Hannah Storm cited “amazing statistics” showing that “83% of college women and graduates admit heavier than usual drinking and 74% increased sexual activity on spring break.” On the NBC Today Show the same day, Katie Couric observed:

57% say they are promiscuous to fit in; 59 percent know friends with multiple sex partners during spring break. So obviously, this is sort of an everybody’s doing it mentality and I need to do it if I want to be accepted.

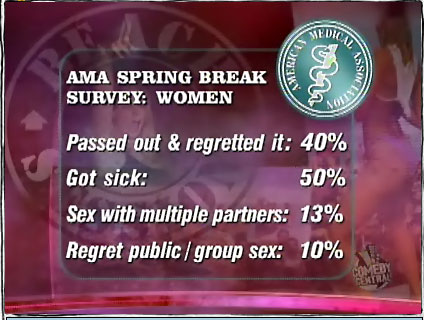

And most of the local television news references I scanned via Nexis, as well as the Jon Stewart Daily Show graphic reproduced below, focused on the most titillating of the findings in the AP article: 13% reported having sex with more than one partner and 10% said they regretted engaging in public or group sexual activity.

But if one reads the AP article carefully, it is clear that the most sensational of the percentages were based on just the 27% of women in the sample who reported having “attended a college spring break trip:”

Of the 27 percent who said they had attended a college spring break trip:

- More than half said they regretted getting sick from drinking on the trip.

- About 40 percent said they regretted passing out or not remembering what they did.

- 13 percent said they had sexual activity with more than one partner.

- 10 percent said they regretted engaging in public or group sexual activity.

- More than half were underage when they first drank alcohol on a spring break trip.

The fact that only about a quarter of the respondents actually went on a spring break trip (information missing from every broadcast and op-ed reference I encountered) raises several concerns. First, does the study place too much faith in second-hand reports from the nearly three-quarters of the women in the sample who never went on a spring break trip? Second, how many of those who reported or heard these numbers got the misleading impression that the percentages involved described the experiences of all 18-34 year old women? See the Hannah Storm quotation above. She appears to be among the misled.

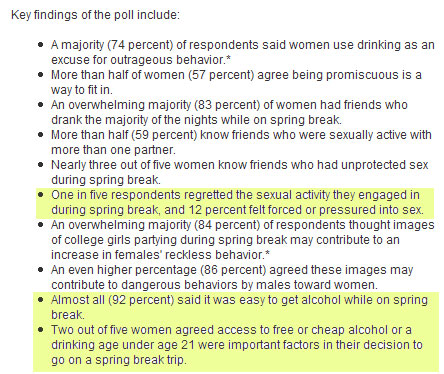

One might think that the press release from the AMA would have gone out of its way to distinguish between questions asked of the full sample and those asked of the smaller subgroup that had actually been on a spring break trip. Unfortunately, not only did they fail to specify that certain percentages were based on a subgroup, they also failed to mention that only 27% of their sample had ever taken a spring break trip. Worse, the bullet-point summary of results in their press release mixes results for the whole sample with results based on just 174 respondents, a practice that could easily confuse a casual reader.

[Highlighting added]
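To see how much the choice of base matters, here is a minimal sketch in Python that re-expresses two of the subgroup findings as shares of the whole sample. It uses only figures quoted above (174 trip-takers, 27% of the sample, and the 13% and 10% results); the function names are my own, and the full sample size is derived from those figures, not taken from the release.

```python
# Figures taken from the post: 174 respondents (27% of the sample) had been on a trip.
SUBGROUP_N = 174
SUBGROUP_SHARE_PCT = 27.0

# Subgroup-only findings quoted from the AP article (percent of trip-takers).
SUBGROUP_FINDINGS = {
    "sexual activity with more than one partner": 13.0,
    "regretted public or group sexual activity": 10.0,
}


def rebase_to_full_sample(subgroup_pct: float, subgroup_share_pct: float) -> float:
    """Express a percentage of a subgroup as a percentage of the full sample."""
    return subgroup_pct * subgroup_share_pct / 100.0


def approx_respondents(subgroup_pct: float, subgroup_n: int) -> int:
    """Approximate number of respondents behind a subgroup percentage."""
    return round(subgroup_n * subgroup_pct / 100.0)


# Full sample size implied by 174 being 27% of it (derived, not stated in the release).
implied_full_n = round(SUBGROUP_N / (SUBGROUP_SHARE_PCT / 100.0))

if __name__ == "__main__":
    print(f"Implied full sample: about {implied_full_n} women")
    for label, pct in SUBGROUP_FINDINGS.items():
        full_pct = rebase_to_full_sample(pct, SUBGROUP_SHARE_PCT)
        count = approx_respondents(pct, SUBGROUP_N)
        print(f"{label}: {pct:.0f}% of trip-takers, "
              f"{full_pct:.1f}% of all respondents (about {count} women)")
```

Run as a script, this puts the two headline figures at roughly 3.5% and 2.7% of all respondents, or about 23 and 17 women. Because the panel was non-random, these numbers describe the sample only and should not be read as estimates for any larger population.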

So what can we make of all this?

Consider first the relatively straightforward issues of disclosure and data reporting. In this case, the AMA failed to indicate in their press release which results were based on the full sample and which on a subgroup. Their press release also failed to indicate the size of the subgroup. Both practices are contrary to the principles of disclosure of the National Council on Public Polls. Also, as described yesterday, their methodology statement at first erroneously described the survey as a “random sample” complete with a “margin of error.” It was actually based on a non-random, volunteer Internet panel. In correcting their error two weeks after the data appeared in media reports across the country, they expunged from the record all traces of their original error. In the future, anyone encountering the apparent contradiction between the AP article and the AMA release might wrongly conclude that AP’s reporter introduced the notion of “random sampling” into the story. For all of this, at the very least, the AMA owes an apology to both the news media and the general public.

The issues regarding non-random Internet panel studies are less easy to resolve and worthy of further debate. To be sure, pollsters and reporters need to disclose when a survey relies on something less than a random sample. But aside from the disclosure issue, difficult questions remain: At what point do low rates of coverage and response so degrade a random sample as to render it less than “scientific?” And is there any yardstick by which non-random Internet panel studies can ever claim to “scientifically” project the attitudes of some larger population? In the coming years, the survey research profession and the news media will need to grapple with these questions.

For now, MP agrees with those troubled by the distinction made by the AMA official (as quoted in yesterday’s post) between “academic research” and “a public opinion poll:”

[T]his was not academic research – it was a public opinion poll that is standard for policy development and used by politicians and nonprofits.

Apparently I need to reiterate that this is not an academic study and will [not] be published in any peer reviewed journal; this is a standard media advocacy tool

I agree that the release of data into the public domain demands a higher standard than what some campaigns, businesses and other organizations consider acceptable for strictly internal research. With an internal poll, the degree of separation between the pollster and the data consumer is small, and the pollster is in a better position to warn clients about the limitations of the data. Numbers released into the public domain, on the other hand, can easily take on a life of their own, and data consumers are more apt to reach their own conclusions absent the pollster’s caveats. Consider the excellent point made by MP reader and Political Science Professor Adam Berinsky in a comment earlier today:

Why should anyone accept a lower standard for a poll just because the results are not sent to a peer-reviewed journal? If anything, a higher standard needs to be enforced for publicly disseminated polls. Reviewers for journals have the technical expertise to know when something is awry. Readers of newspapers don’t and they should not be expected to have such expertise. It is incumbent on the providers of the information to make sure that their data are collected and analyzed using appropriate methods.

Finally, I put a question to Rutgers University Professor and AAPOR President Cliff Zukin that is similar to one left earlier this afternoon by an anonymous MP commenter. I noted that telephone surveys have long had trouble reaching students, a problem that is worsening because adults under 30 are more likely to live in the cell-phone-only households that are out of reach of random digit dial telephone samples. Absent a multi-million dollar in-person study, bullet-proof “scientific” data on college students and the spring break phenomenon may be unattainable. If the AMA had correctly disclosed their methodology and made no claims of “random sampling,” would it be better for the media to report on flawed information than none at all?

Zukin’s response was emphatic:

Clearly here flawed information is worse than none. And wouldn’t the AMA agree? What is the basic tenet of the Hippocratic Oath for a physician: First, do no harm.

Would I rather have no story put out than a potentially misleading one suggesting that college students on spring break are largely drunken sluts? Absolutely. As a college professor for 29 years, not a question about it. This piece is extremely unfair to college-aged women.

And the other question here is one of proper disclosure. Even if one had but limited resources to study the problem, the claims made in reporting the findings have to be considerate of the methodology used. I call your attention to the statement they make in the email that the main function of the study was to be useful in advocacy. Even if I were to approve of their goals, I believe that almost all good research is empirical in nature. We don’t start with propositions we would like to prove. And the goal of research is to be an establisher of facts, not as a means of advocacy.

I’m not naive or simplistic. I don’t say this about research that is either done by partisans or about research that is never entered into the public arena. But the case here is a non-profit organization entering data into the public debate. As I said to them in one of my emails, AAPOR can no more condone bad science than the AMA would knowingly condone bad medicine. It’s really that simple.

As always, contrary opinions are welcome in the comments section below. Again, I emailed the AMA and their pollster yesterday offering the opportunity to comment on this story, and neither has responded.

Wow, that’s gruesome.

The line between “flawed information” and misinformation is subject to debate, but I know which side _I_ think that press release came down on.

I am trying to imagine whether the AMA would apply similarly lax standards to other human subjects research and analysis — provided, I suppose, that it was in the service of “media advocacy.” Sigh.

I recall a number of years ago the AMA put out a widely reported study that purported to show mortality differences between left- and right-handed people. Soon after, the Society of Actuaries had thoroughly debunked the study and exposed the flawed methodology.

Perhaps the AMA should just stick to curing sick people.

I agree 100% with Zukin’s response above. It’s no excuse to say, “Doing a valid study would have been too hard, so we did a crappy one. But that’s OK because it was all in the name of advocacy.” A purportedly scientific organization should practice proper scientific methodology and should not present blatantly flawed data. Period.

Just out of curiosity, why do so many people think that the AMA is a “scientific” organization? It’s not, it’s an advocacy group for physicians. I doubt that anyone at the AMA has practised medicine in many years, if ever. They’re certainly not scientists.

That the AMA would publish such distorted information is just par for the course these days. Professionalism is just a word. Truth, ethics and morality play no part in the meaning of the word.

This is fascinating stuff. I’d like to highlight two points – one, promoting the perception that, as Mr. Zukin succinctly put it, “college students on spring break are largely drunken sluts,” reinforces for some young women that this is “normal” behavior – that “everyone is doing it” – and they should too if they want to “fit in.” So I really don’t know what advocacy goal the AMA thought it was pursuing by going to the mass media – a more targeted release of (accurate) data to college and university presidents would have been more strategic. After all, the “news” that this sort of thing goes on during Spring Break has already been delivered by MTV, etc.

Second, as the former director of a political program at a women’s health advocacy organization, I frequently encountered the cynicism of reporters who felt all advocates make up the facts to fit their particular agendas. We always used professional public opinion researchers with impeccable integrity, but the facts barely stood a chance against the posture that “we insiders know how things *really* work in Washington.” It’s disgusting that the AMA would reinforce this (mistaken) belief.

I’m not surprised by this scandal. The AMA, like other medical organisations (e.g., the CDC, and the New England Journal of Medicine) has long used bogus statistical studies to “show” that guns should be outlawed. When reading detailed debunkings of some of them it is clear that dishonesty, not just incompetence, is behind the methodology (e.g., arbitrarily using selected subsets of the readily available data). Doctors are useful people to have around when you’ve been shot, but they’re not experts on the causes of crime and violence — let alone unbiased ones — any more than they are unbiased experts at such poll-taking.

MP:

I’m disappointed that you seem to go easy on the media’s role in this. More and more news outlets have hired ombudsmen in recent years. They also need to add someone to filter polls and ensure they’re described accurately (if they get used at all).

I remember during the 2004 prez debates how all the networks touted their “online surveys” shortly after the event was over. Up until that time I was one who regularly participated in online polls…usually finding them through right leaning websites. During the debates, however, it became apparent to me and other conservatives that groups like MoveOn.org were gaming the online surveys. We stopped participating altogether. Soon thereafter the surveys began producing results like this: “92% of survey respondents believe Kerry won the debate!”. The usual pablum was mumbled by the host that the survey was non-scientific. But the results quickly became part of the echo chamber. On the conservative sites we had a good laugh laying down bets on just how high the numbers would go.

That’s just a small example of how the media allows itself to be manipulated. They have a responsibility to police themselves and set some kind of standard as to which polls will be included in their coverage and how they will be described. Please don’t let them off the hook for swallowing whole any old press release disguised as a “poll”.

Ping Pig wrote:

‘Just out of curiosity, why do so many people think that the AMA is a “scientific” organization? It’s not, it’s an advocacy group for physicians. I doubt that anyone at the AMA has practised medicine in many years, if ever. They’re certainly not scientists.’

Note that I referred to the AMA as a “purportedly” scientific organization. The degree to which they are not (consistently) scientific is evident to a knowledgeable person. However, in the public eye, they do represent medical science: e.g., they publish a journal which everyone has heard of, consisting largely of valid scientific studies. And their rank-and-file members practice the applied science of medicine.

As such, there is a greater expectation for the validity of their studies than there is for, say, studies produced by MoveOn.org.

First, I moved here from the UK at age 26 and am very jealous that I never got to go on spring break in Ft Lauderdale, or wherever. But I’ll get over that eventually... after all, I’m not too far from “senior” break in the same region, and Viagra will be generic by then!

Second, and less smuttily, the key problem here is that the reporting (starting with the AMA release, and ending up on The Daily Show, which is what I actually saw) ignored the most important fact. Only 26% of those polled went on “Spring break”. This meant that the college students who, say, volunteered at soup kitchens were not counted. When I saw the Daily Show numbers (10% had group sex or whatever) I knew they were wrong, and that’s why. For those who had sex with multiple partners, it’s 13% of 26%, or roughly 3%, and with a sample of 600 in total it’s a useless number!

Given that there’s a huge amount of self-selection in going on a spring break trip, it’s now like conducting a study on pot-smoking at a Grateful Dead show and extrapolating from those numbers to all 18-35 year olds.

I am though a little perturbed by Cliff Zukin lumping all internet polling in the same bracket. If you just put up an “insta poll” on your web site, of course it’s BS. If it comes from a pre-selected panel, and it’s controlled for bias by weighting it to known characteristics about the population, it’s basically OK. George Terhanian at Harris Interactive (where I used to work) spent a lot of time on this methodology, and even though I haven’t followed up on it recently, it showed pretty decent results (e.g. they were closer to the 2000 election results than the telephone polls). And of course accessing kids over the phone is equally tricky, and that goes for everyone. None of my 3 most recent housemates had a land line and they are all in their 30s.

And frankly I have no problem believing that 50% of the college age kids in Ft Lauderdale/Lake Havasu/wherever are drinking to excess. It’s the basic reason they went there!

And finally, what a bunch of puritan fuss from the AMA. Young people have been blowing off steam in this way since time began, and we still seem to be surviving as a civilization (or at least our problems seem mostly to be caused by middle aged puritans, who these kids will probably turn into).

Of course, perhaps if the AMA weren’t sending us head-fakes like this, we might look more closely at their 100 year history of screwing over the American health care system anytime universal health care becomes a political possibility.

Mr. Cliff Zukin wrote, “Even if I were to approve of their goals, I believe that almost all good research is empirical in nature. We don’t start with propositions we would like to prove. And the goal of research is to be an establisher of facts, not as a means of advocacy.”

In an ideal world, yes. In the real world, a motive must exist for research to take place. Someone must perceive:

1) an event, state, fact, or phenomenon,

which in turn suggests

2) a theory, hypothesis, or proposition,

which focuses attention on the subject, and which generates both

3) the desire to investigate further and possibly

4) the funds needed to do a rigorous study.

I may be wrong – but I’m probably wrong less than half of the time – however, you probably know of statistically significant studies that originated from a theory, hypothesis, or proposition in far greater number and importance than of such studies which arrived at a thesis only after undirected, open-ended observation and research.

The latter type, unpredisposed studies, will likely become even less common as funding for pure research continues to become less available.
